Jingjing Ren

|

|

Biography

I am a Ph.D. student in Robotics and Autonomous Systems at the Hong Kong University of Science and Technology (Guangzhou), supervised by Prof. Lei Zhu. I received my Master's degree in Computer Technology under the supervision of Prof. Xuemiao Xu and my B.E. in Computer Science (Elite Class) from the South China University of Technology.

My research primarily focuses on generative and enhancement models for generating high-quality visual content.

I am expected to graduate in 2026 and currently on the job market. Please feel free to contact me if interested.

Research Experience

- 05/2025 – Present: Research Intern at Adobe Research.

- 12/2023 – 05/2025: Research Intern at Huawei Noah’s Ark Lab.

- 05/2021 – 11/2021: Research Intern at Tencent WeChat.

News

- 07/2025: Three papers are accepted by ICCV 2025.

- 02/2025: One papers is accepted by CVPR 2025.

- 09/2024: Two papers are accepted by NeurIPS 2024.

Selected Publications

For the full list of publications, please visit my Google Scholar.

|

|

UltraPixel: Advancing Ultra-High-Resolution Image Synthesis to New Peaks Jingjing Ren*, Wenbo Li*, Haoyu Chen, Renjing Pei, Bin Shao, Yong Guo, Long Peng, Lei Zhu NeurIPS 2024 PDF / Webpage / Code (600 Star) / HF Demo |

|

Turbo2K: Towards Ultra-Efficient and High-Quality 2K Video Synthesis Jingjing Ren*,Wenbo Li, Zhongdao Wang, Haoze Sun, Bangzhen Liu, Haoyu Chen, Jiaqi Xu, Aoxue Li, Shifeng Zhang, Bin Shao, Yong Guo, Lei Zhu ICCV 2025 Paper / Webpage |

|

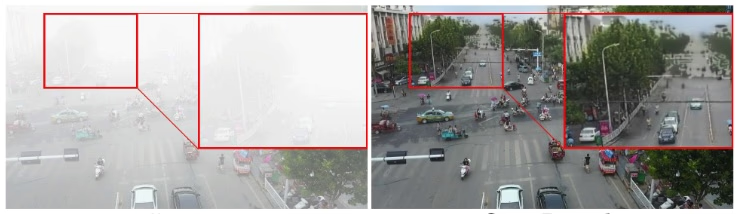

Triplane-Smoothed Video Dehazing with CLIP-Enhanced Generalization Jingjing Ren*, Haoyu Chen, Tian Ye, Hongtao Wu, Lei Zhu IJCV 2025 Paper |

|

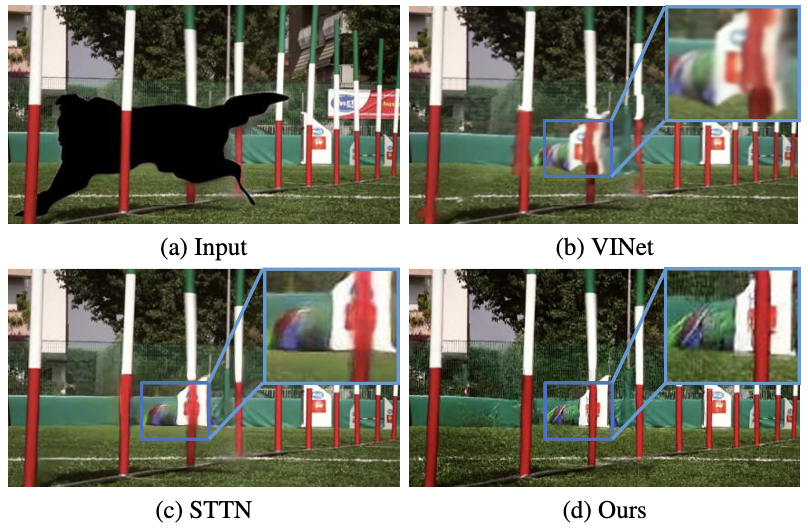

DLFormer: Discrete Latent Transformer for Video Inpainting Jingjing Ren*, Qingqing Zheng, Yuanyuan Zhao, Chen Li, Xuemiao Xu CVPR 2022 Paper / Code |

|

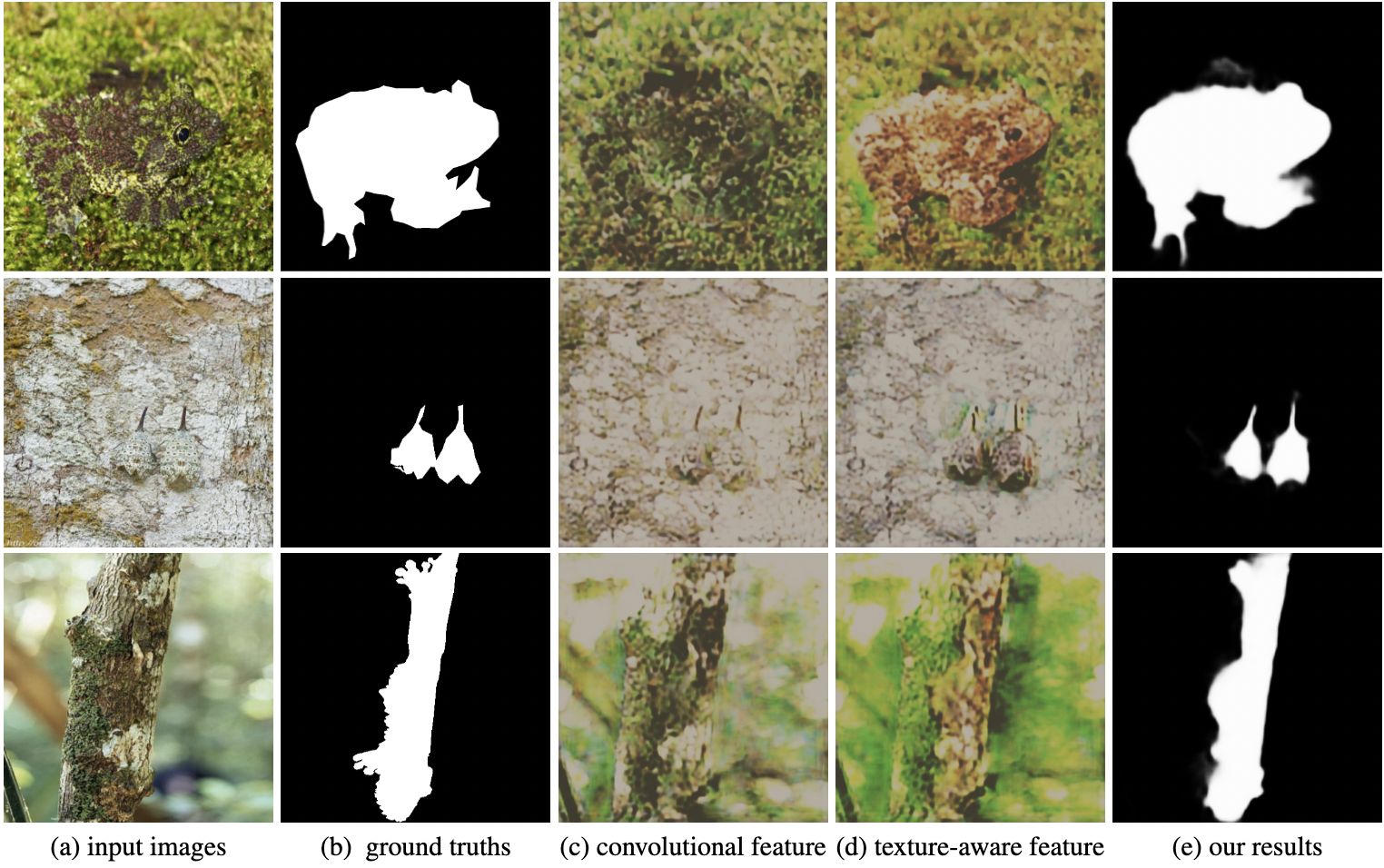

Deep texture-aware features for camouflaged object detection Jingjing Ren*, Xiaowei Hu, Lei Zhu, Xuemiao Xu, Yangyang Xu, Weiming Wang, Zijun Deng, Pheng-Ann Heng TCSVT 2023 Paper |

|

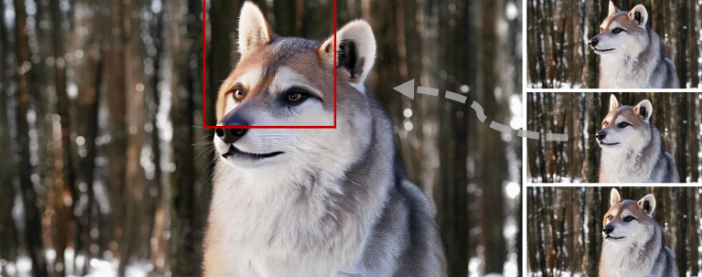

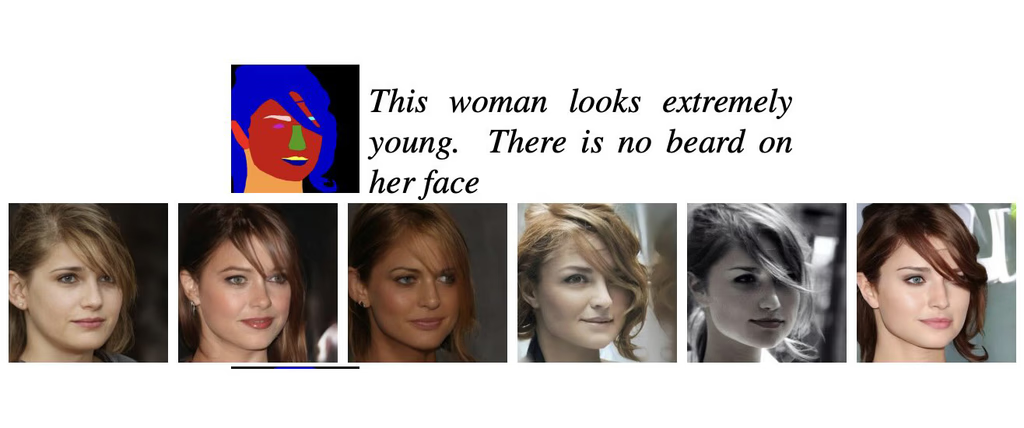

Towards Flexible, Scalable, and Adaptive Multi-Modal Conditioned Face Synthesis Jingjing Ren, Cheng Xu, Haoyu Chen, Xinran Qin, Lei Zhu Arxiv 2023 Paper / Webpage |

|

TurboVSR: Fantastic Video Upscalers and Where to Find Them Zhongdao Wang, Guodongfang Zhao, Jingjing Ren, Bailan Feng, Shifeng Zhang, Wenbo Li ICCV 2025 Highlight |

|

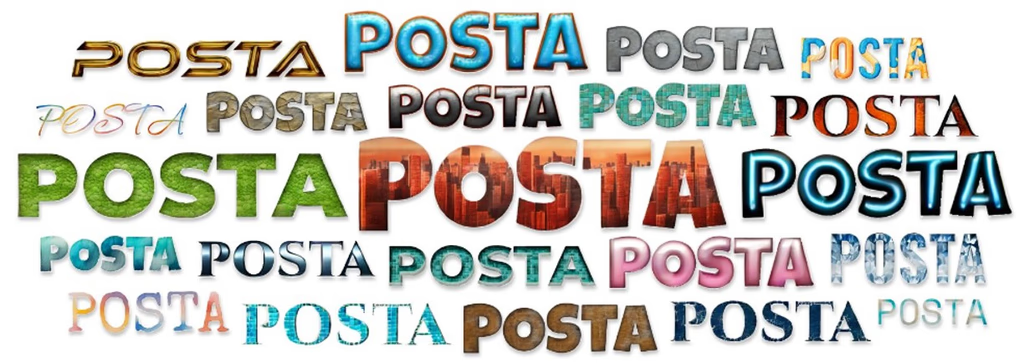

POSTA: A Go-to Framework for Customized Artistic Poster Generation Haoyu Chen*, Xiaojie Xu*, Wenbo Li, Jingjing Ren, Tian Ye, Songhua Liu, Ying-Cong Chen, Lei Zhu, Xinchao Wang CVPR 2025 Paper / Webpage |

Professional Activities

Reviewer

- NeurIPS, ICML, ICLR, CVPR, ICCV, ECCV, AAAI, IJCAI.

- IEEE IJCV, IEEE TIP, IEEE TNNLS, Pattern Recognition.

Seminar Report

-

Ultra-High Resolution Image Synthesis and its Future

at Shanghai AI Laboratory (Info)2024.09

© Jingjing Ren | Last updated: July, 2025.